Introduction

Amazon Elasticsearch is an AWS managed full text search and analytics engine supported by open source Elasticsearch API. It collects huge amount of structured and unstructured data and index them based on user specified mappings and makes it searchable using rich and powerful RESTful APIs in near real time. Elasticsearch actually uses Inverted Index that maps each unique word to documents containing that word. This allows fast discovery of documents in large datasets

Key Terminologies

Document

A document is a basic unit of storage in Elasticsearch. Each document a JSON object with keys and respective values.

Mappings

Mapping provides schema for the document that contains fields and their data types.

Index

An Index in Elasticsearch can be compared to a database in RDBMS. An index is nothing but collection of documents with similar characteristics. An index has a unique name that can be used to search, update and delete operations in the cluster.

Shard

Every index is spread across multiple pieces call shards. When you create an index, you can decide how many shards do you need and accordingly the index is split across available shards, also called as primary shards.

Replica

As a backup in case of failure and to improve search performance, each primary shard can have 0-N replicas. Number of replica are assigned at the time of index creation. If you have one node in your cluster then you can set replica count as 0.

Node

There are different types of node

- Master Node Master nodes does the job of configuration and management of entire cluster.

- Data Nodes Data Node actually stores the data and perform search and aggregation operations.

Cluster

A collection of one or more nodes a called as cluster. It automatically assigns a master in the cluster and reorganizes itself to handle the incoming data. A cluster can be in one of the three states indicating the health of the cluster.

- Green : the cluster is in healthy state

- Yellow : replica shards for at least one index aren't allocated to nodes.

- Red : at least one primary shard and its replicas aren't allocated to a node.

Creating A Cluster

Now that we understand the basics of Elasticsearch, the crucial part is to build a fast and highly available cluster, that in itself raises few questions. These are one of the very common questions asked by architects and almost every time you are likely to get a response as "It Depends!". With the generic considerations we come to a conclusion by asking below question to ourselves.

Should I use Dedicated Master?

The whole purpose of a dedicated Master is to manage the cluster, from the state of the cluster to schema of indices and location of shards etc. If you have only one node in your cluster and data increases dramatically, the node might not respond to requests and cluster may become unstable.

Considering the cost parameter you can always choose to avoid using dedicated master for low volume requirements like non-production cluster. But it is always recommended to use a dedicated master for production workloads for better stability and high availability.

How many data nodes do I need?

First thing is you need to figure out the storage you are going to need based on the workloads. There are mainly two types of workloads in Elasticsearch.

- Single index workloads are external data source with all of the contents that is put inside a single index for search and index is updated whenever there is update to the source.

- Rolling index workloads on the other hand receives data continuously. Generally the indices are created on daily basis and older indices are deleted after the retention period.

In case of Single index workloads it is easy to determine how much data you have, but in case of Rolling index workloads you have to find out how much data flows daily and what's the retention period. you can start off with a simple formula

- Storage Needed = Source Data x Source:Index Ratio x (Replicas + 1)

- Nodes Needed = Storage Needed / Storage per data node

Note- Source:Index Ratio(compression ratio) = 1 and replica count is 0-based

Lets take an example for Rolling index workloads, you receive 100 GB of data daily and the retention period is seven days. You have one replica that gives us 200 GB per day. So for seven days you will need 1400 GB storage. If you choose m5.large.elasticsearch instance with 512 GB General Purpose EBS volume, then by the above formula we would need at least three nodes in the cluster.

Similarly by knowing the source data, compression ratio and replica count you can decide how many data nodes you need in your cluster.

How many shards do I need?

When your node is not large enough to handle the index size, the index is split across shards. It not only distributes the load but also parallelize the processing of documents. To begin with you can set number of shard count using below formula.

- Number of Shards = Index Size / 30GB

In the previous example of Rolling index workloads, we had index size of 100 GB, that means we would need four shards.

More shards doesn't always mean better performance. If your index is less than 30 GB, you choose to use only one shard.

In future as you observe the workload in the cluster, you can adjust shard count and get the best out of Elasticsearch.

What went wrong for me

One thing that comes to mind before provisioning any resource in AWS is how much that's going to cost us and how we can reduce the cost? Of course there is no way we can compromise on production account's Elasticsearch cluster.

But for non-production workload we can save a lot by using bare minimum configurations because that does not have any major business impact.

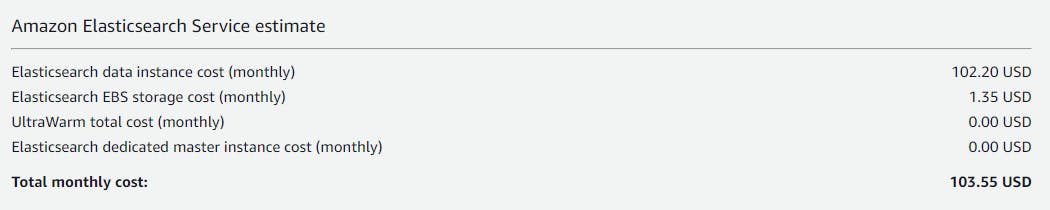

Amazon Elasticsearch Service is billed according to instance hours, EBS Storage and data transfer. An m5.large.elasticsearch instance with 2 vCPUs and 8GiB of memory costs 0.142 USD per hour. An EBS volume of 10 GB without a dedicated master would roughly estimates to -

The same thing happened, we decided to use a single node cluster without clear understanding of the bottlenecks of single node cluster.

It was working fine in early days but soon the data ingestion increased and we started observing that the logs were missing for some time on random days with no pattern to detect what was going wrong. There were no errors in the flow that shipped CloudWatch logs to the cluster. We kept facing the outage until there came another issue in, Kibana. it started giving out error to new users who tried to login, the error was -

- 'this action would add [n] total shards, but this cluster currently has [N]/[N] maximum shards open'

When I checked for cluster health, it clearly shows cluster status as yellow with huge number of shards as unassigned.

{

"cluster_name" : "AccountID:ES-Cluster",

"status" : "yellow",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"discovered_master" : true,

"active_primary_shards" : 501,

"active_shards" : 501,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 499,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 50.1

}

Upon checking for cluster state, it was observed that the cluster was using default settings because we didn't mention number shards per node or the replica count specifically. In case of default settings each index was distributed across five primary shards and each shard has one replica.

"settings" : {

"index" : {

"opendistro" : {

"index_state_management" : {

"policy_id" : "delete_indices_older_than_15d"

}

},

"number_of_shards" : "5",

"provided_name" : "index-name",

"creation_date" : "1111111111",

"number_of_replicas" : "1",

"uuid" : "12fedsaertr42341",

"version" : {

"created" : "545634"

}

}

}

Because we had only one node in the cluster, there was no way for the replica of primary shard to be assigned to another node. And there are 1000 shards in a single node by default. As the indices grew and primary shard count crossed 500, meaning, including replica of each primary we were already using all the 1000 shards available in single node cluster. Because we had a retention policy of 15 days, that helped deleting older indices periodically and ultimately reducing the unassigned shards count, we would be back on normal behavior of the cluster.

With this error, we could at least knew the cause of outage and new users unable to login to Kibana Dashboard issue. There were few options to fix this issue of unassigned shards -

1. Very obvious option was add another node, so that the replica shards can be allocated to that node. But this would also double the cost for Elasticsearch cluster. And for development environments its not the best option as you have think of cost as well.

2. Increase the number of shards in the cluster, but this also means as the indices will grow you will consume more primary shards. Those primary shards will add respective replicas and unassigned shards will keep on increasing and eventually you will end up with same problem as before. At the same this option might impact the performance of search operation. So this was not a viable option.

3. The best option possible was to set replica count to 0 at the time of index creation itself. So that we always had enough number active shards in single node with no shard as unassigned and the cluster status as Green.

Conclusion

By creating the indices with replica as 0 with additional setting of less shards per node and retention policy to lesser number of days, we were able achieve a stable single node Elasticsearch cluster in a non-production account in a cost effective manner. But for production level workloads it is always recommended to use multiple data nodes with a dedicated master for a stable cluster.

References

opendistro.github.io/for-elasticsearch-docs..

aws.amazon.com/blogs/database/get-started-w..

aws.amazon.com/blogs/database/get-started-w..